Conversational AI has moved past the experiment phase. Most teams have proven the basics: bots handle volume, resolve routine queries, and reduce frontline load.

But as organizations push toward higher automation rates, they hit a familiar wall. The challenge is no longer capability; it’s confidence. When AI becomes a primary brand ambassador, leaders need to know that someone is watching when conversations turn complex, emotional, or unpredictable.

This is where Human-in-the-Loop becomes more than a safety net: it becomes an operating model.

AMCC, the Agent-Monitored Contact Center, is built to give AI autonomy with human oversight, so teams can scale automation without gambling with customer experience or trust.

The Silent Automation Failure Gap

Automation doesn’t usually break with a “System Down” alert. It breaks quietly.

Standard bots thrive on predictability. They fail when conversations become emotional, ambiguous, or multi-layered. Because there is no “red alert” for a frustrated customer, these failures go unnoticed until they show up as higher churn and eroded brand trust.

This is what silent failure looks like:

- The Loop: The bot repeats “I didn’t quite get that” until the customer gives up.

- The Misclassification: A high-stakes dispute is treated like a routine billing query.

- The Ghosting: A customer realizes the bot is stuck and simply leaves the brand forever.

This gap between bot logic and human need is the single biggest blocker to scaling AI. AMCC is designed to close it.
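To make one of these failure modes concrete, here is a minimal sketch of how "The Loop" might be detected from a transcript. It is an illustration only, not AMCC's implementation: the `Turn` type, the `FALLBACK_PHRASES` list, and the threshold of two consecutive fallbacks are all assumptions chosen for the example.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical fallback phrases. A real bot would more likely expose a
# dedicated fallback intent than rely on text matching.
FALLBACK_PHRASES = ("i didn't quite get that", "sorry, i didn't understand")


@dataclass
class Turn:
    speaker: str  # "bot" or "customer"
    text: str


def is_fallback(turn: Turn) -> bool:
    """True if a bot turn looks like a 'did not understand' reply."""
    text = turn.text.lower().replace("\u2019", "'")  # normalize curly apostrophes
    return turn.speaker == "bot" and any(p in text for p in FALLBACK_PHRASES)


def is_stuck_in_loop(turns: list[Turn], threshold: int = 2) -> bool:
    """Flag a conversation once the bot falls back `threshold` times in a row."""
    consecutive = 0
    for turn in turns:
        if turn.speaker != "bot":
            continue  # customer turns do not reset the streak
        consecutive = consecutive + 1 if is_fallback(turn) else 0
        if consecutive >= threshold:
            return True
    return False


stuck = [
    Turn("customer", "I was charged twice"),
    Turn("bot", "I didn't quite get that."),
    Turn("customer", "DOUBLE CHARGE"),
    Turn("bot", "I didn't quite get that."),
]
print(is_stuck_in_loop(stuck))  # True
```

A signal like this is cheap to compute on every live conversation, which is what allows a stuck interaction to be surfaced while the customer is still there rather than discovered later in a churn report.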

Redefining the Operating Model: The Core Idea Behind AMCC

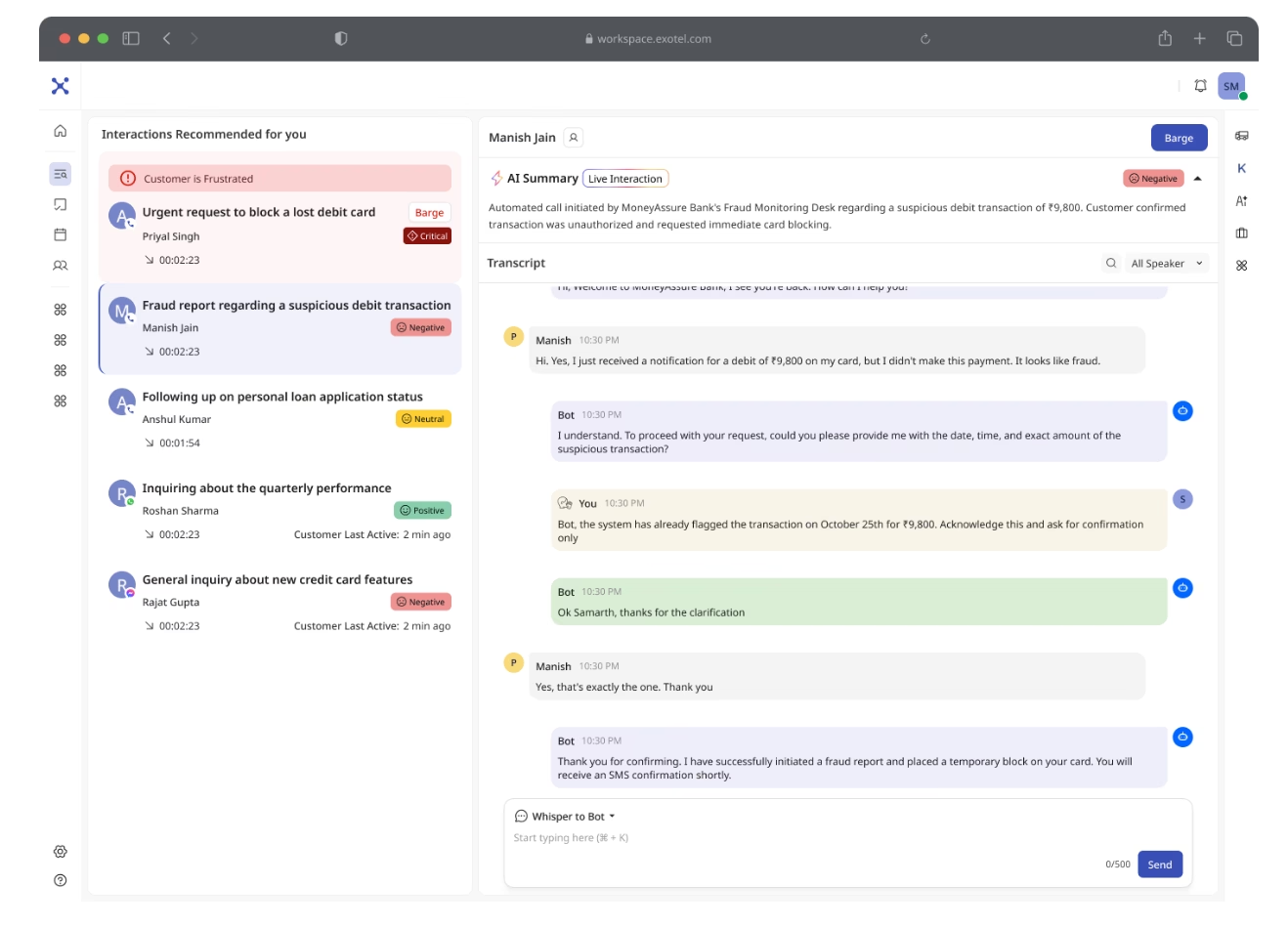

AMCC introduces an agent-monitored model for running AI in production.

Instead of treating bots as isolated tools, AMCC makes them part of a supervised human-in-the-loop system where human judgment is applied in real time. AI handles conversations by default. Humans oversee outcomes and intervene only when risk appears.

At the center of this model is a new role: the Monitoring Agent.

Monitoring agents don’t sit in queues answering calls. They supervise multiple live AI interactions from a centralized command center, watching for signals that indicate confusion, frustration, or failure.

The result is an agent-monitored contact center where:

- Bots handle the majority of conversations

- Humans monitor live interactions

- Intervention happens before experience breaks

This shift allows AI to scale while keeping humans exactly where they add the most value.

What “Agent-Monitored” Means in Practice

To move from 10% automation to 80% and beyond, teams need more than better bots. They need real-time supervision.

AMCC provides a dedicated supervision workbench where:

- Conversations are prioritized by risk, not sampled randomly. Sentiment drops, intent confusion, and stalled interactions rise to the top.

- Intervention is immediate and informed. Agents see live summaries, full transcripts, and customer context before stepping in.

- Takeover is seamless. Agents can whisper guidance, barge in, or take over the conversation without breaking the customer experience.

- Fallbacks are guaranteed. If monitoring capacity is reached or it’s after hours, AMCC automatically routes to live agents or offers callbacks.
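The prioritization and fallback behavior described above can be sketched in a few lines. This is a simplified illustration under stated assumptions, not AMCC's actual scoring or routing logic: the `LiveConversation` fields, the weights in `risk_score`, the 0.5 threshold, and the `triage` function are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class LiveConversation:
    conversation_id: str
    sentiment_drop: float     # 0.0 (stable) to 1.0 (sharp drop); assumed scale
    intent_confidence: float  # 0.0 to 1.0; low values mean intent confusion
    seconds_stalled: float    # time since the conversation last progressed


def risk_score(c: LiveConversation) -> float:
    """Blend the three signals into one score in [0, 1]. Weights are illustrative."""
    stall = min(c.seconds_stalled / 60.0, 1.0)  # saturate after one minute
    confusion = 1.0 - c.intent_confidence
    return 0.5 * c.sentiment_drop + 0.3 * confusion + 0.2 * stall


def triage(
    conversations: list[LiveConversation],
    monitoring_slots: int,
    after_hours: bool = False,
    risk_threshold: float = 0.5,
) -> tuple[list[LiveConversation], list[LiveConversation]]:
    """Split risky conversations into (watchlist, fallback).

    The watchlist is what monitoring agents see, highest risk first.
    The fallback list holds risky conversations that exceed monitoring
    capacity (or arrive after hours) and should be routed to a live agent
    or offered a callback.
    """
    risky = sorted(
        (c for c in conversations if risk_score(c) >= risk_threshold),
        key=risk_score,
        reverse=True,
    )
    slots = 0 if after_hours else monitoring_slots
    return risky[:slots], risky[slots:]


live = [
    LiveConversation("calm", 0.1, 0.95, 0),
    LiveConversation("confused", 0.6, 0.5, 30),
    LiveConversation("angry", 0.9, 0.2, 90),
]
watchlist, fallback = triage(live, monitoring_slots=1)
print([c.conversation_id for c in watchlist])  # ['angry']
print([c.conversation_id for c in fallback])   # ['confused']
```

The design point is that nothing risky is silently dropped: a conversation above the threshold is either in front of a monitoring agent or on a fallback path.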

Why This Changes the Economics

The biggest breakthrough with the AMCC model is structural. In an agent-monitored model, scale comes from changing how humans are used.

In the AMCC model, AI handles the bulk of conversations, while a small, highly skilled team of Monitoring Agents handles the exceptions. This allows a 100-seat contact center to operate with a fraction of the traditional headcount without sacrificing CSAT. In fact, CSAT often rises because the “Human Safety Net” catches errors before the customer even feels them.
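The staffing claim can be made concrete with back-of-the-envelope arithmetic. The sketch below is purely illustrative: the 80% automation rate echoes the target mentioned earlier, while the figure of 10 conversations supervised per Monitoring Agent and the `monitored_headcount` helper are assumptions for the example, not published AMCC numbers.

```python
import math


def monitored_headcount(
    seats: int,
    automation_percent: int,
    conversations_per_monitor: int,
) -> tuple[int, int]:
    """Estimate staffing under an agent-monitored model.

    seats: agents needed if humans handled every concurrent conversation
    automation_percent: share of conversations the AI handles by default
    conversations_per_monitor: live AI conversations one Monitoring Agent
        can supervise at once (assumed, varies by use case)
    Returns (live_agents, monitoring_agents).
    """
    automated = seats * automation_percent / 100
    live_agents = math.ceil(seats - automated)
    monitoring_agents = math.ceil(automated / conversations_per_monitor)
    return live_agents, monitoring_agents


live_agents, monitors = monitored_headcount(
    seats=100, automation_percent=80, conversations_per_monitor=10
)
print(live_agents, monitors, live_agents + monitors)  # 20 8 28
```

Under these assumed inputs, 100 seats become 28. The result is sensitive to how many conversations one person can realistically supervise, so that parameter is worth measuring rather than guessing.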

Human–AI Harmony, by Design

The future of AI isn’t about choosing between humans and machines. It’s about designing systems where each does what it does best.

AI brings speed, scale, and consistency. Humans bring judgment, empathy, and accountability. AMCC is where those strengths come together, not in competition, but in coordination.

By placing humans in the control layer and AI in the execution layer, AMCC creates a model where automation can grow without losing its human backbone. That balance is what makes scale sustainable.

Agent-less is reckless. AI-Human Harmony is how real progress happens.